"Why didn't testing find this issue?" Because you desire something that doesn't exist!

After 15 years in software testing, this is still a topic I'm dealing with way too often: people who have a completely misguided understanding of what testing can and cannot do.

In the year 2025, too many people still think that:

- testing is a phase, not a continuous activity that never ends;

- after you "do the testing" you shouldn't find new issues, because you "have tested" (the phase is over);

- when new issues are found after testing, you can blame the testers for not delivering perfect work. Isn't it their job to find all the bugs?!

Basically, too many people don't know what the main goal of testing is, and what the limitations of testing are.

We test because we are imperfect, and cannot blindly trust the output of our work. With testing, we aim to find crucial information that threatens the value of our product and then we decide what to do with that information. Especially that last bit should be drilled into your skull: if you don't act on the information you find, then you have not closed the loop and you should ask yourself why you were testing that bit. Testing costs time and therefore money, so we all should be aware of opportunity cost.

The core of testing work

The core activity of testing is of the cognitive variety; pushing the buttons to execute a test case is therefore less important than many people make it out to be. Designing the test case is an important part of the work: why test this, and not something else? What risk do you cover, and why does it matter? What do you hope to gain, information-wise?

No one person is equipped to design all the valuable tests, because we all have our blind spots. Testing has strong roots in psychology, and we benefit from diversity in thinking to improve our testing. That's why testing shouldn't be done by one person, but by the whole team.

Here's where the first misguided thought often emerges: many people do think that one person can be responsible for the majority of test work, from designing the tests to executing them and reporting on them.

They don't see the flipside: the one person doing all this work suffers from something we all suffer from, namely cognitive biases. It's wrong to view testing as a "task to be completed, assigned to one person" and not "a continuous activity that benefits from many people knowing what to do, and doing the things that need to be done".

Testing can be a role, but it's better when everyone involved in the software creation process develops at least some skills that belong in the testing category. Someone with the tester role should be working to improve the testing capabilities of people in their team and beyond.

Why does testing miss issues?

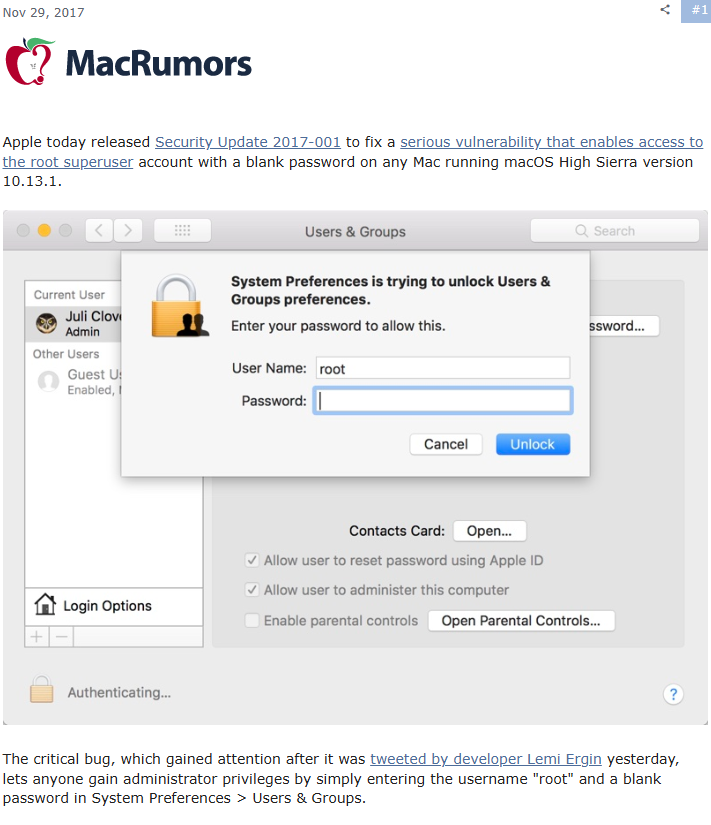

If I had received a dollar every time people said "how did QA miss this?!" when a bug was found in production in a popular, heavily used product, I would be rich by now. One example I remember is the infamous root bug in macOS High Sierra.

I saw many comments like that at the time.

I am sure extensive testing is done on new versions of macOS, and everyone involved still totally missed this issue.

Sure, I should keep an open mind to the fact that testers could have done shoddy work, but I think another option is more likely.

It's impossible to test everything: there are simply too many test cases and too little time. And, like I said before, every human has blind spots. Before a bug slips through to production, how many people have missed it? That's not just on the testers, and if you think it is, you are still severely misguided on how testing works.

To quote Jerry Weinberg: "Testing can reveal the presence of bugs, not their absence."

It is not possible to test everything. That's why we:

- let risks that threaten the value of our product guide our testing efforts

- seek input from people who matter in the process of creating, maintaining and improving the product

- diversify our testing, to increase the chances of finding worthwhile information

- stay very mindful of the opportunity cost: what is worthwhile to test (interesting conditions we identified) and what do we have time to test (reduce the test set to a manageable and affordable level)?
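To make the trade-off in the points above a bit more tangible, here is a minimal sketch of risk-based selection under a time budget. Everything in it is an assumption for illustration: the `Idea` class, the 1 to 5 likelihood and impact scales, the "risk per minute" ordering and the example test ideas are all made up, and a real team would bring far more context to the table than a score.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Idea:
    """A hypothetical test idea with rough team estimates."""
    name: str
    likelihood: int  # 1 (unlikely) to 5 (very likely)
    impact: int      # 1 (minor) to 5 (severe)
    minutes: int     # estimated time to run and investigate

    @property
    def risk(self) -> int:
        # Simple, assumed scoring: likelihood times impact.
        return self.likelihood * self.impact


def select_tests(ideas, budget_minutes):
    """Greedily pick the ideas covering the most risk per minute within the budget."""
    selected, skipped = [], []
    remaining = budget_minutes
    for idea in sorted(ideas, key=lambda i: i.risk / i.minutes, reverse=True):
        if idea.minutes <= remaining:
            selected.append(idea)
            remaining -= idea.minutes
        else:
            skipped.append(idea)
    return selected, skipped


ideas = [
    Idea("login with empty password", likelihood=2, impact=5, minutes=15),
    Idea("checkout with expired card", likelihood=4, impact=4, minutes=30),
    Idea("profile page typo sweep", likelihood=3, impact=1, minutes=20),
    Idea("full data migration rehearsal", likelihood=2, impact=5, minutes=120),
]

selected, skipped = select_tests(ideas, budget_minutes=60)
print("testing now:", [i.name for i in selected])
print("knowingly not testing:", [i.name for i in skipped])
```

The `skipped` list is returned on purpose: it is the risk you knowingly leave uncovered, and it is the part people tend to forget when they later ask why testing "missed" something.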

Based on these points, if you apply a little bit of brainpower, you can see that this is indeed not 100% testing. It is not bug-proof, not perfect. A risk-free software release doesn't exist! I am sure Apple added a test case for the specific issue after it was found in production, but there have still been different bugs in newer macOS releases. That's how the cookie crumbles.

Again, that doesn't mean that testing is always done well (it can be done shoddily, or skipped altogether), but it's not a clean either/or story.

But please, stop blaming (only) testers when a bug surfaces in production. (Ideally, you skip the blame game altogether, especially if you have little context.)

Stop thinking that it is out of the ordinary for new information to surface at a time you consider "too late". Testing is never done. It is not a phase you do only once, after which you have complete confidence that everything will be fine.

Heck, I'd even argue that it's the opposite: even with extensive testing, you can be sure that there is still worthwhile information to be found (bugs, risks, you name it).

The solution is to welcome new information, even when it doesn't make you happy or points to a shitty situation in production, and to invest in a process that lets you quickly fix the problem and roll out a new release. Problems are so much worse if you cannot quickly roll out a fix because your infra sucks ass (this is what I mean by "my own twist", I don't think Jerry would have chosen these words lol). If your platform is up to par with modern standards, and you use CI/CD, observability, all that good shit, it's much less of a big deal.

2026, the year when we stop believing in fairy tales?

This is my wish for 2026.

It's the year when people finally understand the powers and limitations of testing:

- it's not a phase, but a continuous activity.

- testing is always allowed to uncover new information, even when it is judged as "too late". Better late than never. By all means, investigate whether the "too late" critique is justified and whether things can be improved, but if not, move on and don't blame only testing. Many more people have missed the info that you're blaming on "bad testing"!

- you will find problems in production, because testing cannot be done perfectly. Accept that, and at least improve your delivery pipeline so bugs can be fixed quickly.

Will my wish come true? No, of course not, but a girl can dream.
