The Best in Test meet-up recap

I had a great time last Tuesday evening at The Best in Test event, organised by Sopra Steria. When I came in at around 5PM, I saw Bas Dijkstra and we were both like, "we haven't been to a testing meetup since...forever?!". This must be a left-over consequence from the great panini period; there seem to be fewer testing meetups these days?

Something else I noticed: where were the young professionals? It seemed like the average age was close to my own: middle-aged people. Do younger generations not like going to meet-ups? Anyone know what's going on here?

Anyway, I had a nice time catching up with people during dinner, and at around 6PM the talks started.

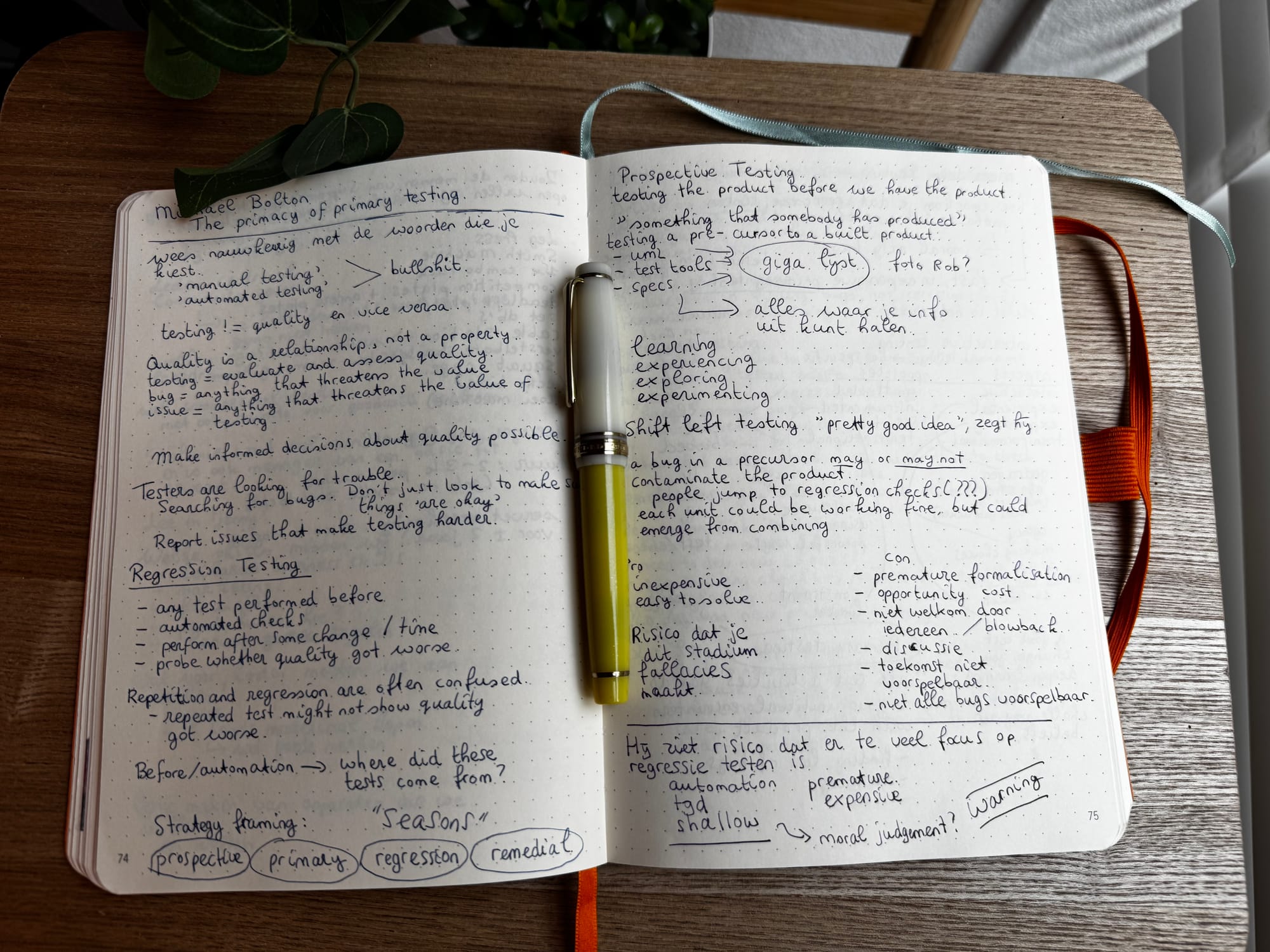

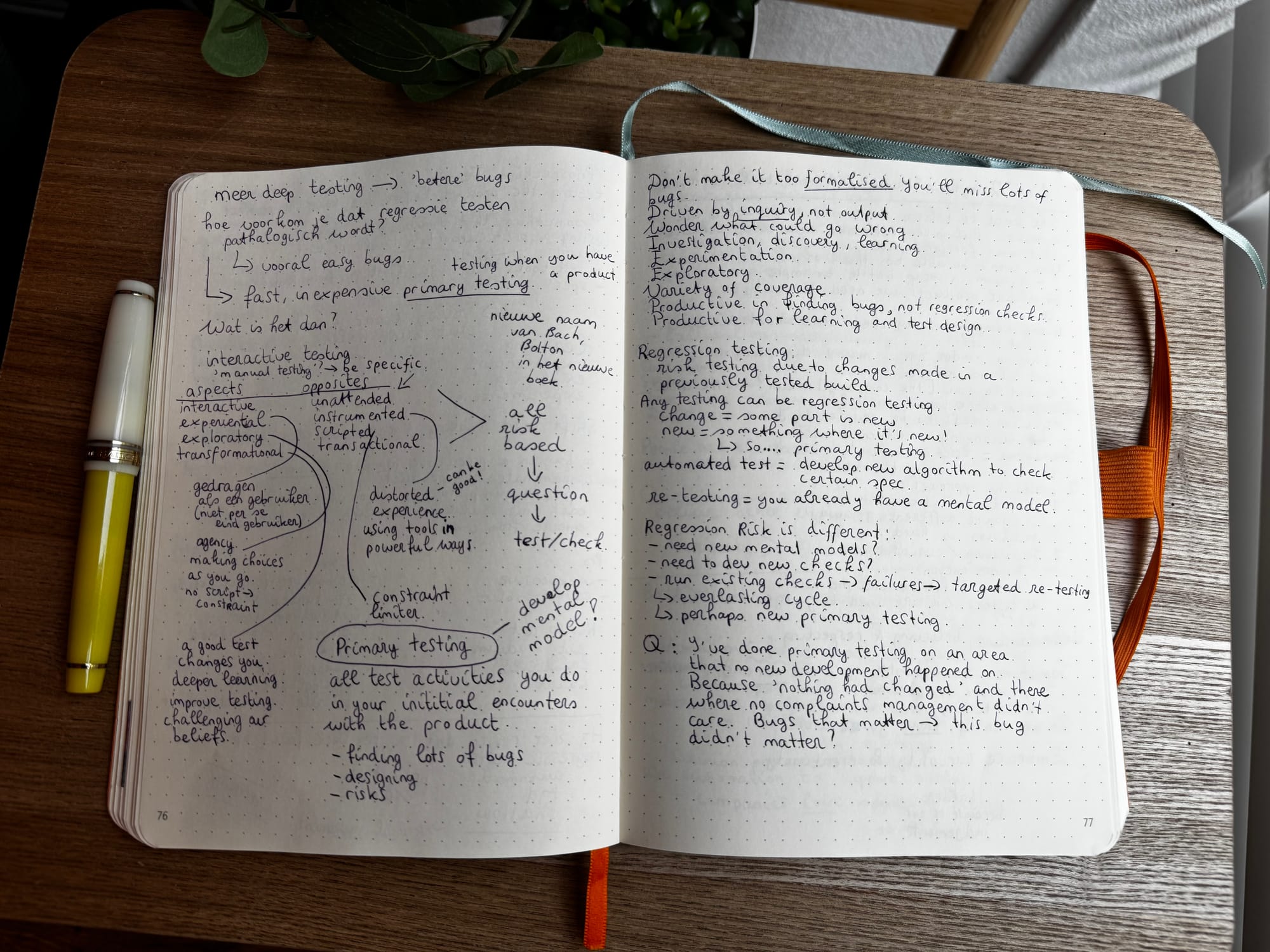

Concentration hack: take notes, using pen and paper!

Michael Bolton kicked off the evening with a talk about The Primacy of Primary Testing. I had a hard time keeping up with my note-taking, as the density of information per spoken sentence was high, but I did my best.

Here are the photos of my notes. If you can't read Dutch, you'll have a harder time reading them, I'm sorry. This is a consequence of my brain being totally fucked up from speaking two languages a lot. I think in both languages, which is as annoying as it sounds.

I enjoyed the talk a lot, and I'm looking forward to reading the upcoming book from Michael and James.

I have a couple of reservations, which I hope will be addressed in the book. The whole model of seasonal testing makes sense to me, but in my current context I have to teach people, who aren't testers, how to test. I don't think they have enough mental models about testing available yet to make sense of these terms: preliminary testing, primary testing and regression testing. It's up to me to get them there if needed, but I wonder how well the model translates into practice.

The people I'm working with already had their minds blown when I told them that testing doesn't only happen when you interact with the software, but also when you ask questions, challenge assumptions, etc. (what Bolton calls preliminary testing). I'm just at the stage where I teach and show them that all testing should be risk-based, and that they have to choose where they want to direct their testing efforts, as we are always dealing with limitations in time and budget.

The seasonal model is very useful for me, though, as it has spurred on lots of new thoughts, questions, ideas. Thinking is my hobby, so yay!

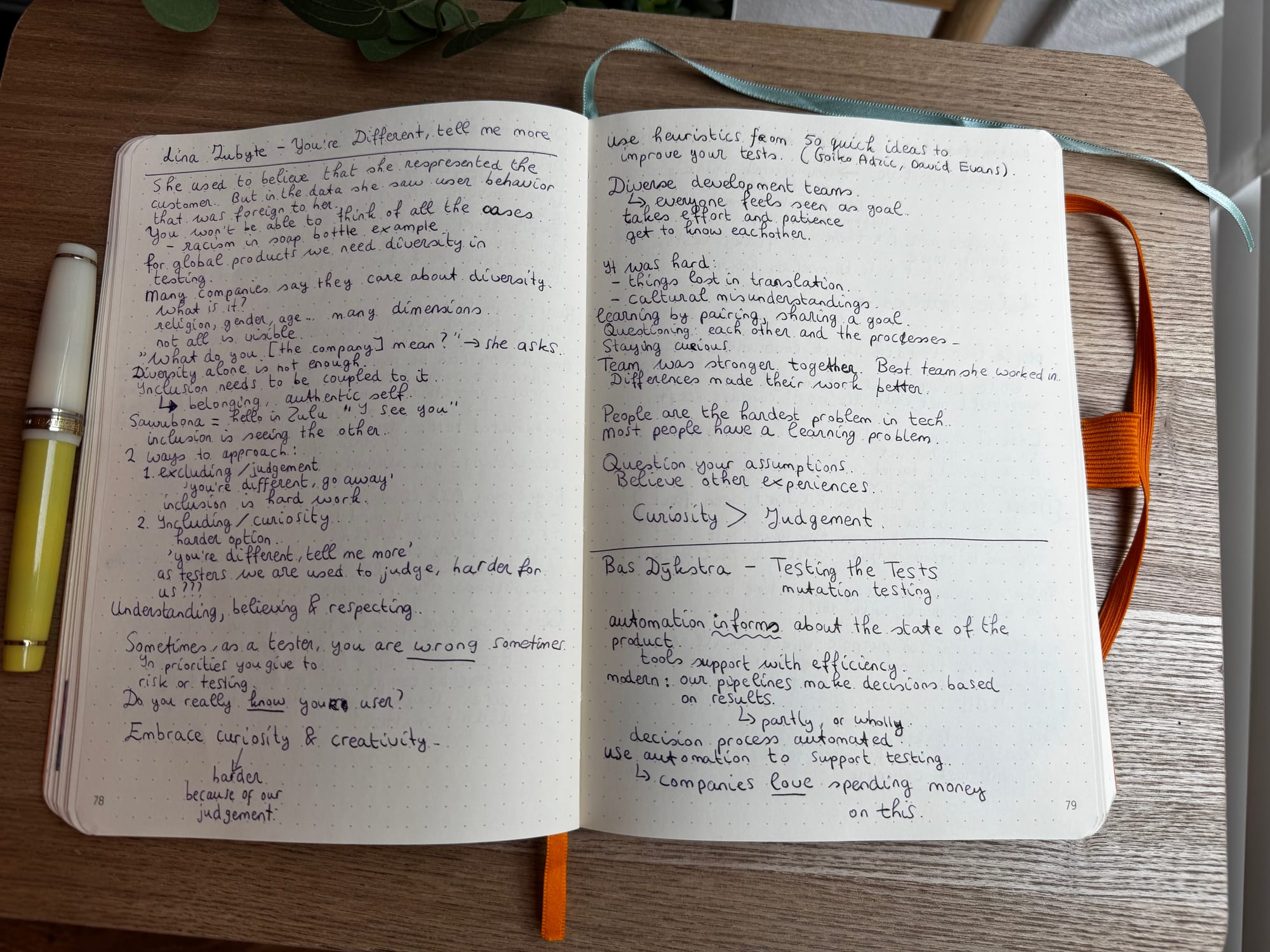

The next talk was by Lina Zubyte: You're Different, Tell me More.

As a tester, you can never represent all your users. Some people have needs you would never think of, or use the application in ways you would never think of. Lina saw this in the data from production, and had this epiphany. She used to think that she, as a tester, represented the user, but this experience taught her otherwise.

Sometimes you are wrong about what you think is important in the product. Do you really know your user? Lina showed us a few examples of how the product she was working on (a healthcare product) was used in practice, and it made her re-think her approach.

The lesson that I want to repeat here is this: believe other people when they tell you about their experiences, and don't be skeptical as a default reaction.

Let curiosity win over judgement.

Bas Dijkstra closed out the evening with a talk about Mutation Testing.

Even though he is a test automation expert, he understands that automation supports testing as a whole.

In fact, even though companies love to blow money on automation efforts (good for his business, aye!), automated tests can deceive us.

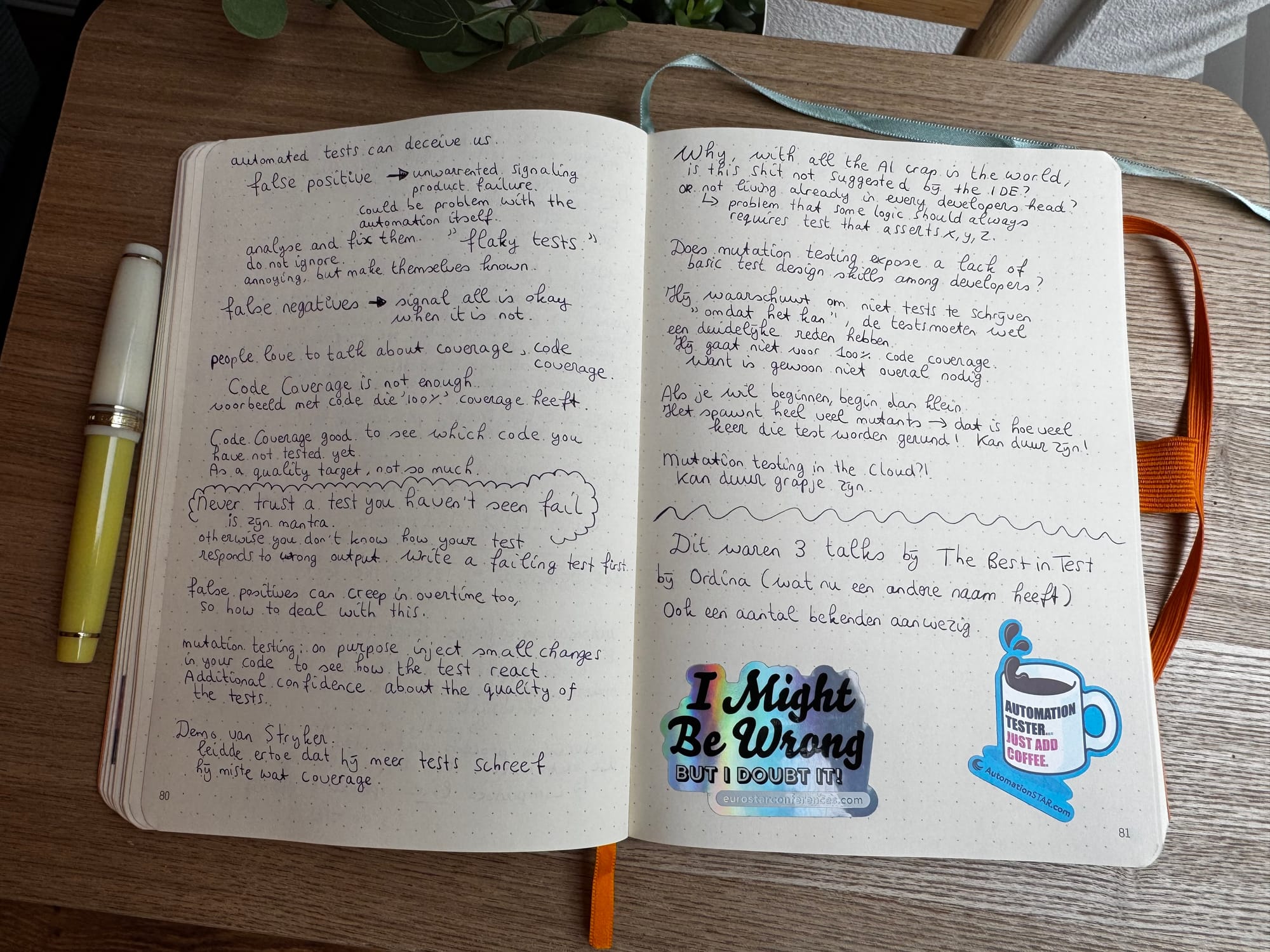

There are false positives, which you could call "flaky tests" a lot of the time. You shouldn't ignore those! The good thing about false positives is that at least they make themselves known!

False negatives are scarier. The tests are green, but a problem is hiding! Code coverage is not enough of an insurance policy against this, and this is where mutation testing comes in. Test the quality of your tests.
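To make the coverage point concrete for myself, here is a minimal sketch in Python. It is not from the talk, and the function and test names are made up for illustration. The test below executes every line of `is_adult`, so a line coverage tool would report 100%, yet it would stay green even if someone changed `>=` to `>`.

```python
def is_adult(age: int) -> bool:
    """Hypothetical function under test: adults are 18 or older."""
    return age >= 18


def test_is_adult_runs() -> None:
    """A weak test: it executes the code but only checks the result type.

    Every line of is_adult is covered, but the boundary at 18 is never
    checked, so a wrong comparison operator would go unnoticed.
    """
    result = is_adult(30)
    assert isinstance(result, bool)


test_is_adult_runs()  # passes, and would also pass for `age > 18`
```

A test that also checks `is_adult(18)` and `is_adult(17)` would be able to fail for that bug, which is the whole point.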

NEVER TRUST A TEST YOU HAVEN'T SEEN FAIL. Typing this in caps because it's so important. Know how your test responds to input that should make it fail!

With mutation testing, you inject small changes into the code to see how the tests react. There are libraries for this; for C# there is Stryker. Bas showed us a demo of how he used this tool in his own project (a port of the REST Assured test library to C#). Mutation testing showed him where he could improve his code and change or add some tests to safeguard against false negatives.
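The mechanics can be sketched by hand in a few lines of Python. This is my own toy illustration, not Bas's demo and not how Stryker works internally: real tools generate the mutants automatically, whereas here two mutants are written out manually. All names (`shipping_cost`, the suites, the mutants) are hypothetical.

```python
from typing import Callable, List

CostFn = Callable[[float], float]


def shipping_cost(order_total: float) -> float:
    """Original code: orders of 50 or more ship for free."""
    return 0.0 if order_total >= 50 else 4.95


def mutant_boundary(order_total: float) -> float:
    """Mutant: '>=' changed to '>'."""
    return 0.0 if order_total > 50 else 4.95


def mutant_negated(order_total: float) -> float:
    """Mutant: '>=' changed to '<'."""
    return 0.0 if order_total < 50 else 4.95


def weak_suite(fn: CostFn) -> bool:
    """Green (True) when both checks pass; never touches the boundary."""
    return fn(100) == 0.0 and fn(10) == 4.95


def strong_suite(fn: CostFn) -> bool:
    """Same checks plus one exactly on the boundary value."""
    return weak_suite(fn) and fn(50) == 0.0


def surviving_mutants(suite: Callable[[CostFn], bool],
                      mutants: List[CostFn]) -> List[str]:
    """A mutant survives when the suite stays green while running against it."""
    return [m.__name__ for m in mutants if suite(m)]


MUTANTS = [mutant_boundary, mutant_negated]

print(surviving_mutants(weak_suite, MUTANTS))    # ['mutant_boundary']
print(surviving_mutants(strong_suite, MUTANTS))  # []
```

Both suites are green against the original code. Only the surviving mutant tells you that the weak suite has a hole at the boundary.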

The goal is not to get 100% mutation test coverage. Bas warned us against the allure of adding tests for the sake of adding tests. I cannot stress this enough: just because it's easy to add tests, please always be mindful that testing should be risk-based. All code needs to be maintained, so don't needlessly add test code if there isn't a clear reason.

The question I wrote down in my notes, though, is: does mutation testing expose a lack of basic test design skills among developers? I mean, let's be honest, there are probably existing heuristics for which kinds of unit tests you should write for certain logic statements to safeguard against false negatives.

Running these mutation tests could also be an expensive affair that makes cloud providers very happy. The tests need to run again for each mutant, and a modest amount of code could already spawn hundreds of mutants. The cost aspect should therefore not be overlooked.

The end of the talks! If you think my handwriting is nice: this is just how I write at normal speed. This isn't magic, it's just plain old practice.
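A back-of-the-envelope sketch of that cost, in Python. The numbers are invented for illustration and not from the talk. It assumes the worst case, where the full suite runs once per mutant with no parallelism; tools may do better than this, for example by running only the tests that cover the mutated code.

```python
def worst_case_minutes(mutants: int, suite_seconds: float) -> float:
    """Total runtime if the whole test suite runs once for every mutant."""
    return mutants * suite_seconds / 60


# Hypothetical project: 300 mutants, a unit test suite that takes 40 seconds.
print(worst_case_minutes(300, 40))  # 200.0
```

More than three hours of build time for a 40-second test suite is the kind of number worth knowing before you add this to every pipeline run.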

What I loved about this evening:

- catching up with people, meeting new people

- listening to three wildly different talks

- writing notes with my fountain pen and being able to concentrate because of this choice.

What I didn't like about this evening:

- the chairs were extremely uncomfortable xD

- the temperature in the room was 26.5 degrees C (yes, I checked!), slowly melting my poor brain

- that it was over at 22:00! I had such a great time
